Facebook algorithms are leading internet paedophiles to children

SvD and Aftonbladet have revealed that tens of thousands of Facebook users are members of Facebook groups centred on the rape of children. Facebook’s own algorithms also encourage Swedish men to contact young girls in Asia.

The police know of 15,000 Swedes who have downloaded pictures and videos involving the sexual molestation of children – on open websites – in the last year alone. According to the police, this figure is only the tip of the iceberg. The Swedish Police Authority’s IT Crime Unit, part of the National Operations Department (Noa), states that the darknet and encrypted communications are the most common channels for sharing abusive material.

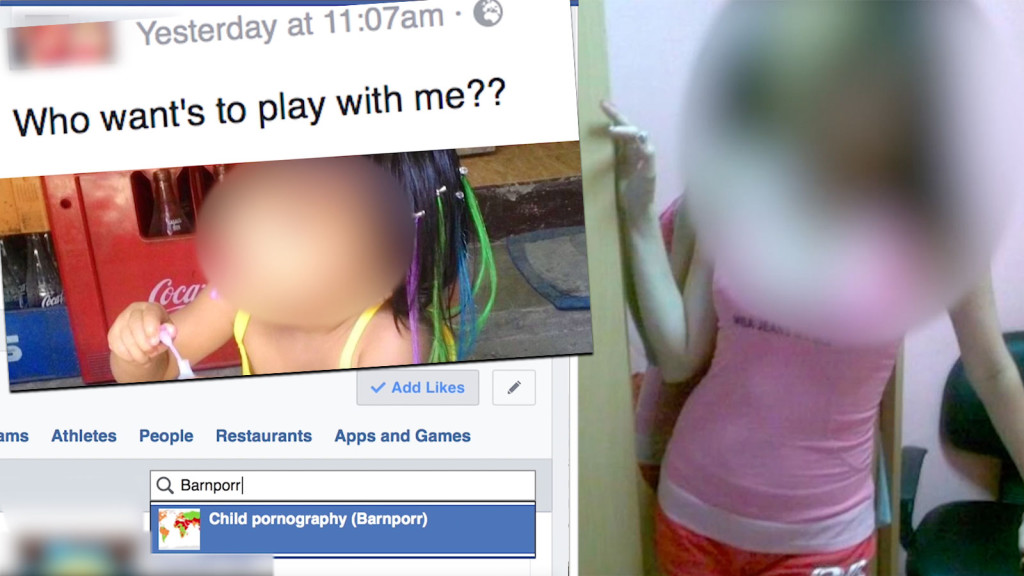

But we can now reveal that Facebook, the world’s most widely used social network, is also used as a platform by those who want to view or offer abuse. We have also seen that in some cases, because of the way its algorithms work, Facebook encourages Swedish men to contact young girls in Asia.

In groups with ambiguous names that can easily be mistaken for victim support groups, members share experiences, links and sometimes even pictures. They goad each other into sharing pictures of family members. Some post their phone numbers for various encrypted messaging apps and invite others to join them in more private forums, where they can chat and share abusive material.

For our investigation, we created several fake Facebook accounts and used them to enter the closed groups. But it was in a fully open group that we saw a picture of a child being raped. The picture had received “likes” from several men. Someone had commented, “this is so beautiful.”

Members encourage each other to share pictures of real abuse. The administrator of one group has issued a warning: “if they are faked, then you will be expelled.”

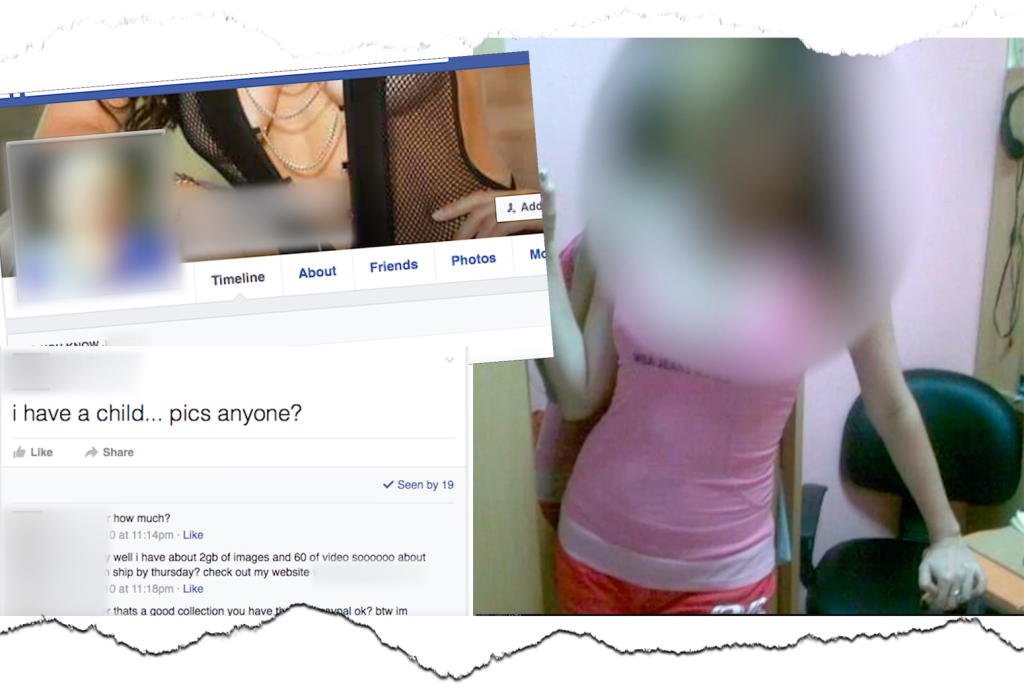

“I’ve got a kid. Is anyone interested in pictures?” one man wrote.

We filed a police report the same day.

At the local police station on Kungsholmen in Stockholm, the investigator could not receive a link directly by email. We had to email the link to a different unit, which would then forward it to the investigator.

“This is difficult to handle, as we’re dealing with Facebook. It’s so large, and we don’t know which countries the users behind the accounts are in.”

The investigator received our report, which was classified as “child pornography crime”.

By the following day, Facebook had removed the drawings and all the pictures from the group, except those depicting people who appeared to be adults. The picture of girls with clothes on was still there.

We clicked on “Report Photo” to notify Facebook, and within a few hours we received an automated reply stating that “we are sorry that you felt uncomfortable,” but that the picture “doesn’t fall within the scope of their policy” and would therefore not be removed.

When we contacted Facebook, our call was passed around from person to person. No one wanted to give an interview.

“As soon as we received your documentation, we investigated the matter, and immediately removed several posts, profiles and groups. We take the security of our users very seriously,” states Peter Münster, Corporate Communications Manager, Nordics, at Facebook in an email.

But even after we received this response, many groups for people with an interest in the sexual abuse of children remained, and it is still possible to like such interests openly.

No one at Facebook wants to tell us why.

Another question that never received an answer is why Facebook’s algorithms match users with a sexual interest in children with young girls in Asia.

By registering a new user profile for a 30-year-old Swedish man who only liked groups and pictures with erotic content, we managed to turn the usually fairly wholesome Facebook feed we are so used to seeing into a virtual red-light district.

As interests, our fake profile listed “children”, “pornography” and “child pornography”. Facebook’s algorithms picked up on these words, and invitations started to arrive.

Whatever we wrote in the search field, we kept seeing young Asian girls. Almost all of them had provocative, sexually suggestive profile pictures. Some did not even try to hide the fact that they undress for money. For example, when we entered the letter “P” in the search box, we were shown a profile called “PT Show Sex”. PT is an abbreviation for “pre-teen”, i.e. a child under the age of 13.

“You need to understand one thing: algorithms are value-neutral. If someone is searching for illegal content, the algorithm will be helpful and guide the user to more relevant illegal content,” explains Dhiraj Murthy, Associate Professor at the University of Texas at Austin.

He is one of the few researchers studying social media algorithms. Among other things, he has investigated the propaganda channels of the Islamic State (IS) on YouTube. SvD and Aftonbladet corresponded with him by email to understand why Facebook recommended that a 30-year-old from Sweden befriend young Asian girls.

“Facebook has access to a lot of data and gives you recommendations based not only on what you and your friends like, but also on what ‘people like you’ like.”

The same mathematical formula that has made Facebook so successful – guessing what you like before you are aware of it yourself, and giving you more of the same thing – also seems to encourage adult men to contact children and young people undressing in front of the camera.

“The algorithm can’t determine whether it’s doing something illegal. It’s only doing what it’s been programmed to do,” Murthy explains.

Thomas Andersson of Ecpat, which works to prevent the sexual exploitation of children, says the organisation has heard reports of Facebook groups for paedophiles.

“These days, it’s easy for abusers to find other abusers. You could say that they have gone from being lone individuals to being part of large global communities. They adapt quickly to most preventive measures, thanks to the way the internet is built: there is always some site where you can meet up, and tools for encryption and anonymisation are widely available today; they are user-friendly and sometimes built into products right from the start.”

He doesn’t know of any cases where Swedish men have approached foreign children on Facebook. But that doesn’t mean it’s not happening.

“In our experience – if there is an opportunity for abuse, it will happen.”

Swedish version: Sexuella övergrepp mot barn sprids på Facebook (“Sexual abuse of children is spread on Facebook”)

Mark Malmström, SvD, Jani Pirttisalo, SvD, Lisa Röstlund, Aftonbladet, Joachim Kerpner, Aftonbladet

Contact: anvandarna@schibsted.se